Celeste Vallance, Elizabeth Franklin and Libor Coufal are members of the Digital Preservation team at the National Library of Australia

Over the past ten years, the National Library of Australia has worked on establishing a holistic and systematic digital preservation program. With all but one of our end-to-end digital preservation workflow steps now in place and running as business-as-usual, work has recently started on implementing the final stage: enhancing access to digital collection material and facilitating its long-term preservation.

Support for complex digital publications

One of the products of our BAU processes is a ‘level of support’ report that lists file formats in our collection, whether we can support access to them, and how. The next logical step, and a starting point for this work, is prioritising and addressing those that we currently cannot support.

From this list, the Digital Preservation team zeroed in on offline HTML publications as first candidates for attention. These publications are complex digital objects that may contain thousands of interconnected files in a myriad of file formats. Finding standalone support pathways for each individual format is not the appropriate way to approach the support problem in this case. Our question was, how can we render and deliver the individual publications as complete entities, using our current digital preservation architecture?

The problem

Our policy for processing digital carriers is to copy the files, unless it breaks the functionality of the content. In the case of the offline HTML publications, copying the files from the CD-ROM doesn’t break the functionality. However, our digital preservation system, Preservica, cannot cope with the complex interconnections between the files. While it can render many of the formats in an HTML publication, it cannot reconstitute the original functionality and viewing experience. The only workaround, until now, involved manually exporting content from Preservica and adding it to reading room computers on a case-by-case basis-- a time-consuming, non-scalable and unsustainable approach.

The solution

A few years ago, Preservica introduced new web-archiving functionality to enable harvesting websites in standard web archiving container formats such as WARC or WACZ. These can then be rendered and browsed within Preservica. The replay functionality was also extended to work with web-archiving files in the supported formats sourced from outside of Preservica.

The publications at hand use the same file formats as websites but were intended to be viewed offline, from the user’s computer. Like web pages, they contain lots of different elements-- text, video, audio, images and maps-- that together create an interactive user experience. A user can browse and access the content of the publication using a web browser without an internet connection.

For our purposes, they are basically offline websites and could therefore be conceptually treated as such. If we could turn these offline publications into “harvested websites”, we should be able to render them in Preservica.

Implementation

Once we realised that this solution may provide an opportunity for improving access, we began to explore how we could transform the loose files into a web-archiving format supported by Preservica. In consultation with our colleagues from the NLA Web Archiving team, we identified WARCIT, a free, open-source tool developed as part of the Webrecorder suite of tools, as the conversion tool.

WARCIT is a Python program that takes a payload, e.g. a structured HTML fileset, and converts it into a single WARC file that allows replicating the original internal structure and supports the links and interactivity between its elements. Once the WARC is ingested into Preservica, all that is needed is a metadata element that directs it to the publication’s starting page. Then, in theory, Preservica can replicate the experience of using the original CD-ROM.

Or so we thought.

Although Preservica was able to render the publications, we quickly discovered that many did not function as intended. Missing images, broken links, and non-operational features were common, sometimes leaving the publication incomplete or even unusable.

Identifying the causes

After detailed investigation, we identified that Replay Webpage, an open-source renderer used by Preservica, requires exact matches between file names and references in the HTML and couldn’t handle exceptions caused by file-name discrepancies (e.g. capitalisation, underscores) or character-encoding; and file-path discrepancies due to incorrectly formed relative links.

These exceptions can sometimes be introduced in Python during the processing of a WARC but sometimes originate on the carrier.

Replay Webpage also struggles to execute some JavaScript-based functions which affect things like navigation menus, pop-ups, search tools, and embedded multimedia.

Initially, we addressed these issues manually: identifying broken elements, correcting filenames or links, and repackaging the WARC to test each fix. Given that a single HTML publication can contain thousands of files and even more links, this was slow, error-prone, and unsustainable.

To accelerate the process, we began creating small Python scripts to automate repetitive tasks, like searching all HTML files for specific strings, replacing characters in filenames, changing file extensions, or normalising case. These scripts significantly reduced the workload but had two major limitations:

-

They could inadvertently make incorrect changes, introducing new problems.

-

We still had to first “manually” scan each site to identify if there were issues, and what they were, before we could determine the appropriate scripts to run.

Using AI to develop targeted fixes

One of the more difficult challenges involved removing special characters and handling the encoding problems they caused-- a task that exceeded our existing programming expertise. With our organisation encouraging exploration of Copilot in daily workflows, we decided to see if it could help.

By describing the problem and desired outcomes to Copilot, we interactively developed a script that performed the necessary corrections accurately and selectively. It took several iterations of testing and refinement, but eventually it worked exactly as intended. At Copilot’s suggestion, we added functionality to generate a detailed report of all changes-- an idea that proved invaluable for quality assurance.

This success prompted us to expand the same approach to other recurring issues. We developed new scripts capable of scanning entire HTML packages to detect and correct discrepancies in filenames, folder names, path references, and encoded characters. Over time, these improvements developed into a more comprehensive workflow.

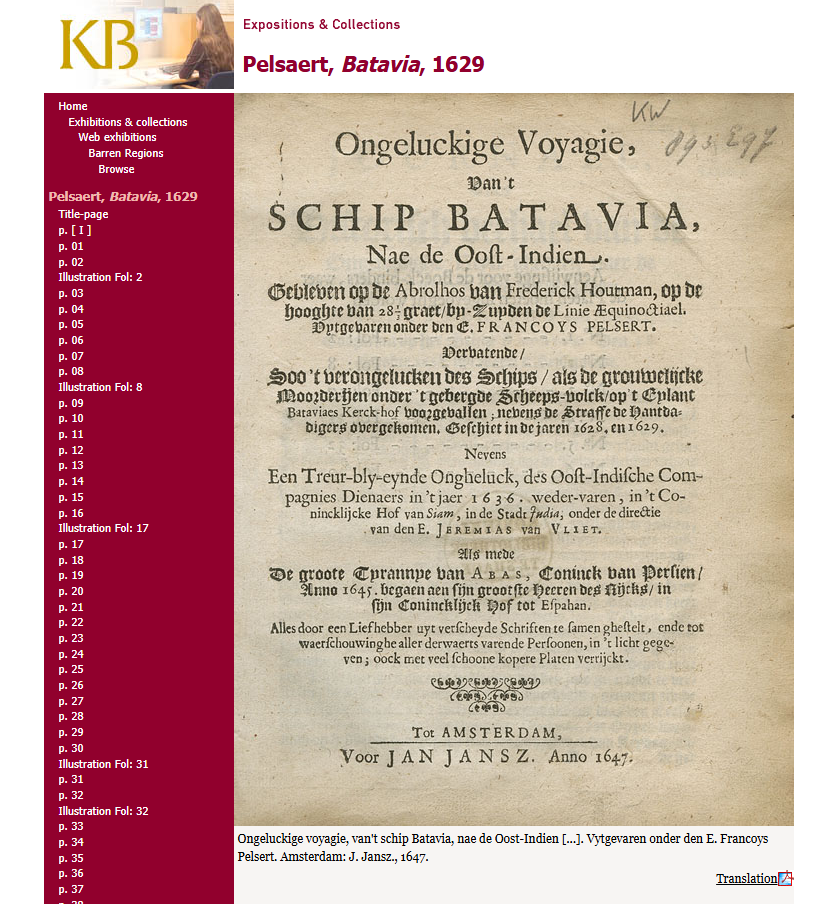

An incompletely rendering page The same page after applying fixes

Creating an interactive quality-controlled process

To ensure reliability, we built an interactive version of the script that can run iteratively. On the first run, it checks for issues and allows us to review and approve any potential changes before applying them. Subsequent runs identify any new issues introduced by earlier corrections. This transformed a process that once took hours into one that can be completed in minutes, with far greater consistency and accuracy.

While we still perform some limited spot-checking to catch unexpected edge cases, the overall workflow is faster, more reliable, and produces higher-quality outputs.

Tackling more complex problems

With foundational issues largely resolved, attention has now turned to more complex problems — particularly those involving unsupported JavaScript functions or unsupported media formats. For each problem encountered we use our own skills and knowledge to identify the cause and determine the solutions. We then work with Copilot to create fixes that can be applied to individual publications before creating a script that can identify issues and create solutions that will work for different variations of the problem.

Our long-term goal is to combine these targeted solutions into a single “master script” capable of scanning a publication for multiple problem types, suggesting and applying the appropriate fixes according to its individual quirks. It’s an ambitious goal — but not an impossible one.

Progress and next steps

Approximately 400 CD-ROM publications were identified for this initial phase. In the first two months, while fitting them in around other tasks, two staff members have processed, packaged and ingested 210 WARCs. We have put aside some more complex ones such as those including Flash. These will be dealt with in a subsequent phase.

Rendering of a converted CD-ROM in Preservica

We started with items from our published collections which pose fewer complications in terms of access restrictions or copyright, but the same method can be applied to other types of material, e.g. downloaded web pages or donated websites that we often see in our archival collections. Importantly, this approach also ensures future-proof preservation that is consistent with other parts of the collection and aligned with broader NLA collection practices, including our extensive web archiving program.

Another benefit of this work is that ‘cleaning up’ the loose files into WARCs helps to declutter the ‘level of support’ report, giving us better visibility of other issues in our collection that are no longer being obscured by the large numbers of files in various formats from the HTML publications. This will help us continue assessing and prioritising additional candidates for preservation action, with the goal of making all content in Preservica accessible to NLA users.

Conclusion

This work represents an exciting new chapter in our digital preservation journey: enhancing access to our collections, the last missing piece of the end-to-end workflow. It will allow us to make these HTML publications accessible to users faster and more easily than before. It will create high impact and visibility for the Library’s digital preservation work both internally and externally. This delivers real outcomes to our users, aligned with the Library’s corporate priorities.

Comments