By Emily Monks-Leeson, Russell White and Kevin Palendat, Library and Archives Canada.

On September 20, 2021, Canada held its 44th federal election following a 36-day campaign. As Canadians turned to their preferred news and social media outlets, the Web and Social Media Preservation Program at Library and Archives Canada (LAC) began crawling and preserving election-related websites as events unfolded online. This was the sixth election LAC had captured through web archiving, and for a task this dynamic, we’ve found that frequency is a friend. We had a background of knowledge and experience to draw on, dating back to 2006, when we archived a modest 21 websites totaling 23 GB of data. Our efforts have intensified with each election to the point where, in 2021, we have crawled over 300 seed URLs, yielding around 1.5 TB of data (and counting).

Selecting Content

One of the most challenging aspects of elections web capture at LAC has been seed selection. Accordingly, each election cycle has prompted greater standardization of our collection processes. To ensure that we’re prepared for a writ drop, we research political websites on an ongoing basis and rely on past seed lists as a guide and starting point for curation. We categorize web content by subject or theme, e.g., “federal government” or “official party sites,” and assign these to the appropriate staff member in order to avoid duplication of effort. Collaboration with other LAC units is also key; subject matter experts in LAC’s Private Archives Branch have helped us identify and prioritize the web content of MPs and Cabinet Ministers whose records LAC has sought to acquire.

Trends & Challenges

Our collections demonstrate an evolution in how political information is disseminated on the web, most notably the shift away from blogs and personally maintained websites and toward social media. Social media platforms tend to change their architecture frequently, which makes them more difficult to capture than “standard” websites and poses all sorts of rendering and format challenges downstream. Twitter stood out as relatively preservation-friendly in 2021 compared to other platforms, a fact reflected in the number of Twitter accounts we crawled (42). Facebook and Instagram were largely omitted from 2021 harvesting, and YouTube crawls were restricted to a limited number of political party and MP accounts (24 in total). The technical realities of social media preservation are widely felt in web archiving circles because they affect the scope and depth of collections. There are excellent tools designed to tackle this complexity at both the point of crawling and replay, but they are challenging to deploy at scale.

Quality Assurance

Once our web crawls are complete, we conduct quality assurance on the captured content to make sure it meets our expectations and matches the live content as faithfully as possible. Quality assurance is time-consuming work, but it is an important step if we want to maintain the integrity of a website and preserve its look, feel, and functionality at a particular point in time. A typical web crawl will harvest thousands of digital objects (HTML, CSS, images, video, JavaScript, PDFs, etc.), but some objects may be missing from a capture. The main goal of quality assurance is to identify these missing elements so that we can “patch crawl” the targeted websites and fill these gaps in the archive.
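As a rough illustration of the gap-finding idea, the sketch below compares the resource URLs seen on a live page with the URLs present in a capture and lists whatever is missing. It is not LAC's actual tooling: the function names and the example URLs are hypothetical, and it assumes both URL lists have already been extracted by some other means.

```python
from urllib.parse import urldefrag


def normalize(url: str) -> str:
    """Trim whitespace and drop any #fragment so equivalent URLs compare equal."""
    return urldefrag(url.strip())[0]


def find_missing(live_urls, captured_urls):
    """Return the live URLs with no counterpart in the capture (patch-crawl candidates)."""
    captured = {normalize(u) for u in captured_urls}
    live = {normalize(u) for u in live_urls}
    return sorted(live - captured)


# Hypothetical example data, not taken from a real crawl.
live = [
    "https://example.org/",
    "https://example.org/style.css#top",
    "https://example.org/logo.png",
]
captured = [
    "https://example.org/",
    "https://example.org/style.css",
]
missing = find_missing(live, captured)
```

In this toy example only the logo image would be queued for a patch crawl. Real quality assurance also involves visual and functional checks that a URL comparison cannot cover.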

Elections unfold dynamically on the web, with content appearing and disappearing at a rapid pace. New sites spring up during the campaign and some go dark once the election is over. This means we have to approach quality assurance with speed and agility or we risk losing access to the resources we aim to preserve.

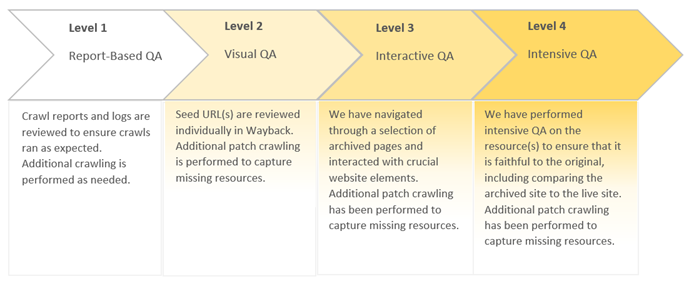

To help us manage QA within a constrained timeframe, we have developed a QA framework that allows us to assign a QA effort level on a 1-4 scale to each seed URL on our crawl list based on the seed’s priority, complexity, and likelihood of changing.
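To make the idea concrete, here is a minimal sketch of how three ratings could be combined into a 1-4 effort level. The 1-3 rating scale, the thresholds, the `Seed` class, and the convention that 4 means the most QA effort are all assumptions made for illustration; the framework's actual rules are not described in this post.

```python
from dataclasses import dataclass


@dataclass
class Seed:
    url: str
    priority: int    # assumed scale: 1 (low) to 3 (high)
    complexity: int  # assumed scale: 1 (simple) to 3 (complex)
    volatility: int  # assumed scale: 1 (stable) to 3 (likely to change)


def qa_effort_level(seed: Seed) -> int:
    """Map the three ratings onto a 1-4 effort level (4 = most effort, by assumption)."""
    for name in ("priority", "complexity", "volatility"):
        value = getattr(seed, name)
        if not 1 <= value <= 3:
            raise ValueError(f"{name} must be between 1 and 3, got {value}")
    total = seed.priority + seed.complexity + seed.volatility  # ranges from 3 to 9
    if total <= 4:
        return 1
    if total <= 6:
        return 2
    if total <= 7:
        return 3
    return 4


# Hypothetical seeds; the URLs are placeholders.
party_site = Seed("https://example.org/party", priority=3, complexity=3, volatility=3)
static_page = Seed("https://example.org/about", priority=1, complexity=1, volatility=1)
levels = [qa_effort_level(party_site), qa_effort_level(static_page)]
```

Sorting a crawl list by this level, highest first, would put the seeds most at risk of change or loss at the front of the QA queue.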

By leveraging these pre-built curatorial and quality assurance frameworks, monitoring the “political web” on an ongoing basis, and appealing to past experience, LAC’s Web and Social Media Preservation Program will be ready for the next election.
