Conor Lynott is a Digital Intern at the National Library of Ireland.

The Problem We Faced

This blog post is an abbreviated version of a longer report that I wrote as part of my National Library of Ireland Digital Internship. This report was written as part of the wider effort to preserve the Census of the Heart project.

Census of the Heart was a project that was led by Mari Kennedy and Kathy Scott. This project was an alternative to the 2016 census and it aimed to capture the emotional state of the nation, rather than traditional data about the demographics of Ireland. The National Library of Ireland is proud to preserve this unique dataset as part of our ongoing commitment to preserving the digital memory of Ireland. The preservation of memory is a key part of the National Library of Ireland’s recently launched 2022-2026 Strategy – available here: https://www.nli.ie/en/reports-and-policy-documents.aspx

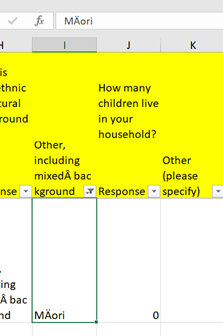

We acquired the collection in the form of two spreadsheets, one for the Irish language answers, and one for the English language answers. The data in the spreadsheets was sourced from a SurveyMonkey questionnaire. Shortly after our receipt of the Excel spreadsheets from the donors, we discovered that there were numerous characters with text encoding issues. An example is included in the screenshot below:

However, the occurrence of these characters in the spreadsheet was not random. Very often there were many repeated occurrences of the same phrases, with the encoding issues appearing in the same place within the phrase. The donors confirmed that they were experiencing the same issues. It was likely that the issues occurred when either exporting the data from SurveyMonkey, or when transforming the CSV into XLS. We attempted to acquire earlier versions of the spreadsheet prior to any transformations, but the donated material were the only available copies.

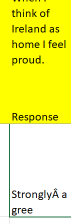

The following example, which contains an encoding issue, occurred 20,519 times in the spreadsheet. This occurrence is shown in the screenshot below.

“Strongly agree”

However, there were phrases that occurred fewer times or only once. Due to the large number of characters, rows and columns, we needed to find a time-efficient way to detect and fix the encoding issues.

Finding a Solution

We set about trialing different solutions that we felt could detect and fix the encoding issues, and arriving at the best solution through the process of elimination. Firstly, we considered the Excel Clean Function. However, upon researching the purpose of this function and how to use it, we decided that the function was not something that would be useful for us. The clean function is designed to simply clear unwanted data and it does not fix encoding issues.

Secondly, we used Excel filters. We hoped that, if the filter detected all the encoding issues, find and replace would allow us to efficiently ensure that the entire spreadsheet did not contain the errors. Unfortunately, this was only partly successful. While the filter was effective at detecting repeated examples of non-text characters - 7,978 examples of “selfâ€employed”, for example - it was not very good at picking up problematic characters which occurred rarely, particularly those present in a long line of normal text when survey participants wrote detailed testimony. For example, the term “MÄori” only occurred once in the spreadsheet.

The Solution

To fix the spreadsheet comprehensively, without errors, we decided to change the encoding of the spreadsheet. This was not possible with an XLS file and, consequently, we created a CSV version. We then changed the encoding using Notepad++ in the CSV file to ANSI. We suspect that the reason for the encoding issue was a mismatch between ANSI and UTF-8 encoding and decoding.

As a final method of QA, we used regular expression searching in LibreOffice. This regular expression,”[^\x00-\x7F]+”, was successful in detecting the encoding issues prior to the ANSI conversion. After performing the conversion to ANSI, the regular expression did not detect any issues.

We intend to preserve the original spreadsheets as well as the internally created CSV file with the correct encoding.

Discussion and Conclusion

I believe this project showed the importance of having some technical knowledge of how encoding issues affect the display of content - particularly when first accepting content from donors. This technical knowledge includes the knowledge of the relationship between file formats and file encoding, as well as the avoidance of encoding mismatches in files.

In this regard, the Digital Preservation: Novice to Know How Course really helped me to understand the basics of files, file units, file storage and encoding. The course helped me to understand how file types work and, therefore, to understand a potential problem and solution. This was a great opportunity to develop the skills associated with a specific pre-ingest activity.